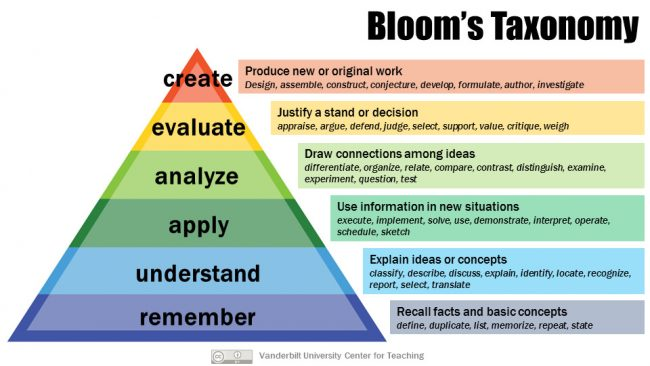

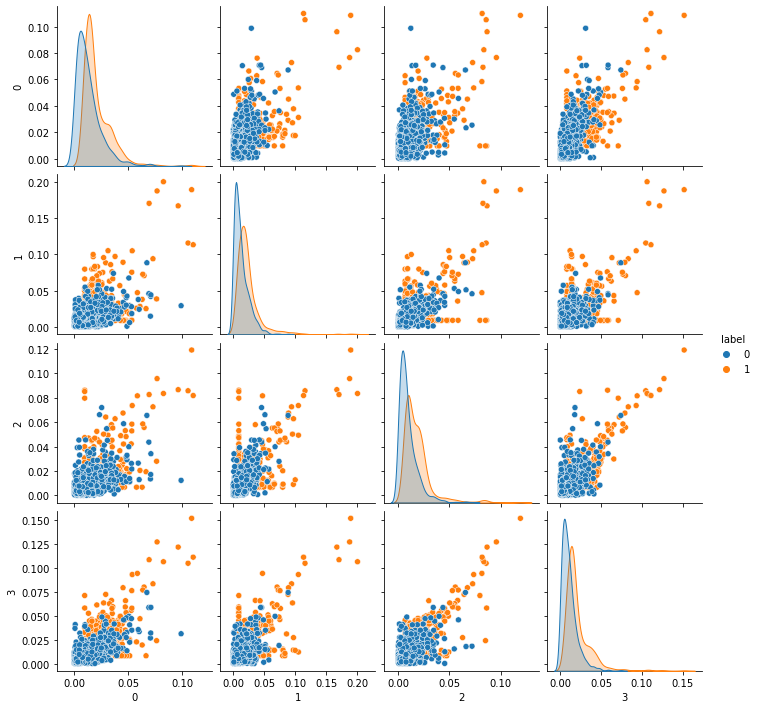

Jul 2020 - Sep 2020: Users’ understanding queries

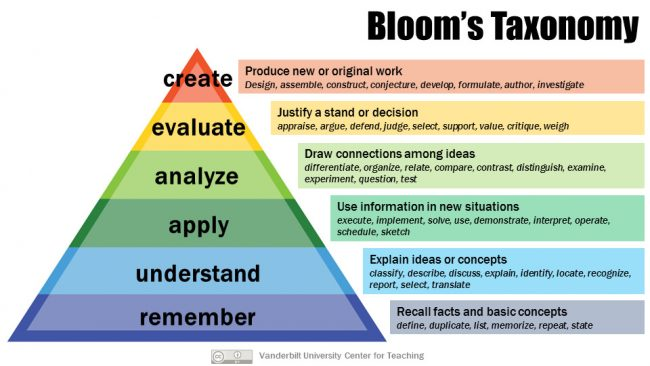

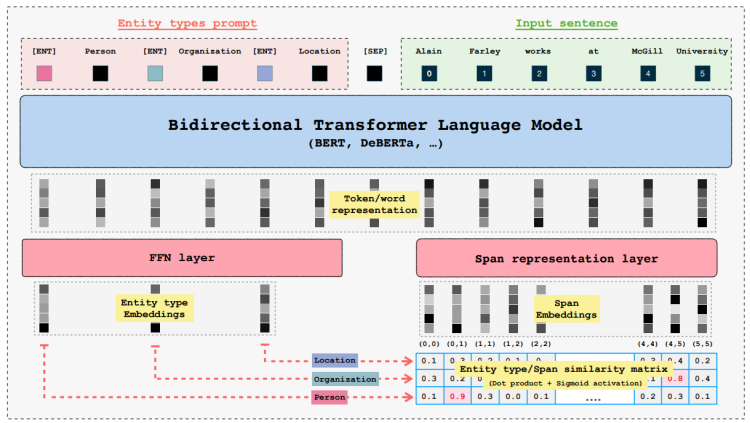

Apr 2021 - Jun 2021: NER for command extraction

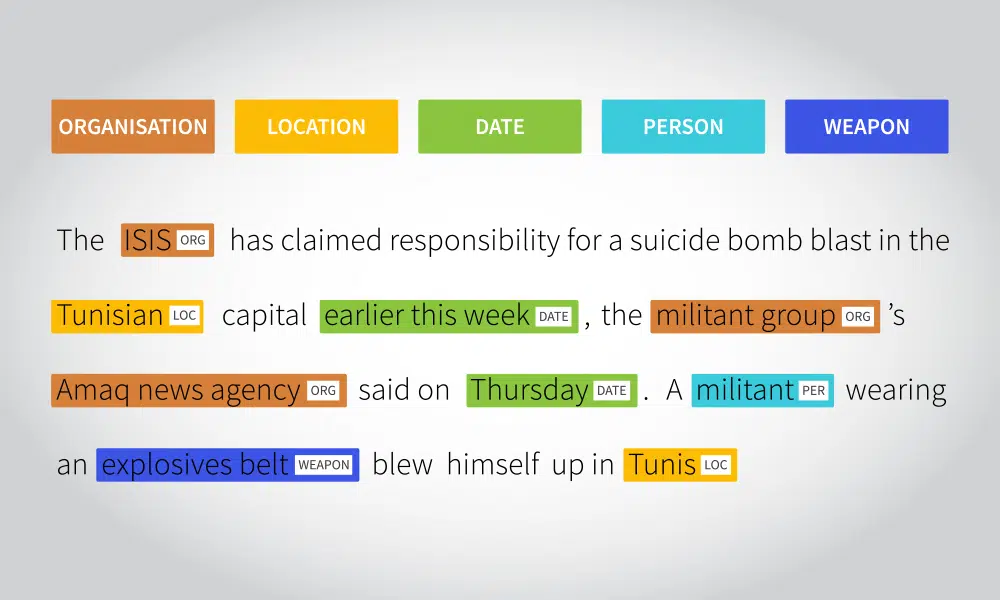

Feb 2021 - Sep 2021: Financial Data Generation

Oct 2021 - Dec 2021: Financial Data Generation

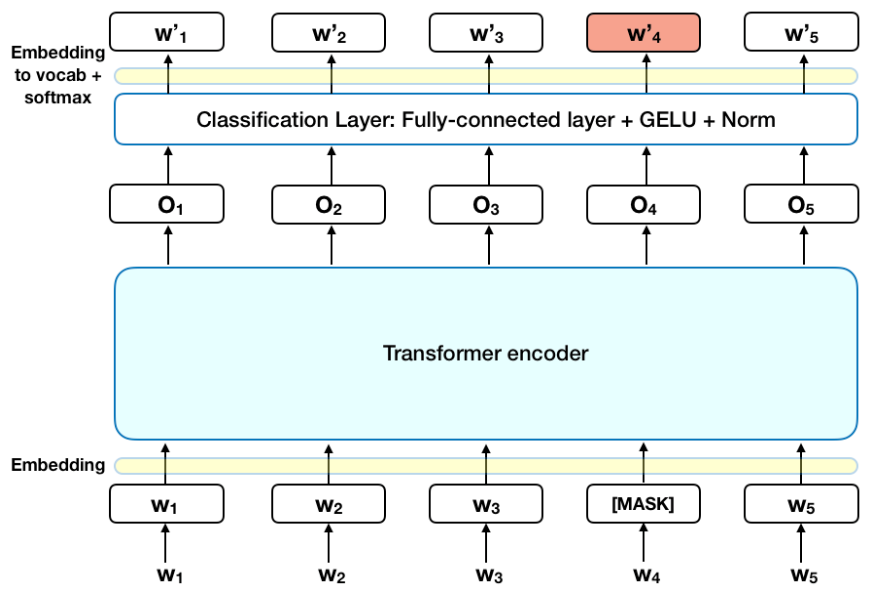

Feb 2022: Continual Self-Supervised Learning using Distillation and Replay

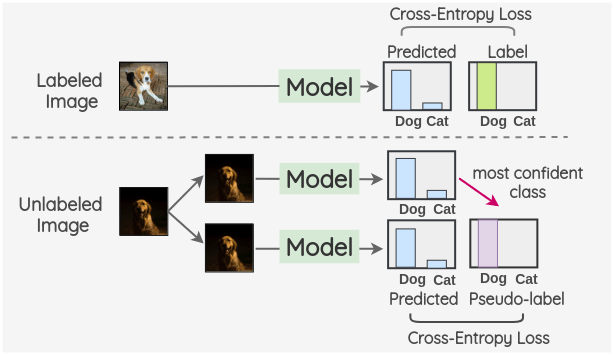

Semi Self-Supervised Learning: improving the performance of self-supervised learning models, especially in scenarios where only a small amount of labeled data is available

Developing a Machine Learning Algorithm for Accurate Counting of Roof Types in Rural Malawi Using Aerial Imagery

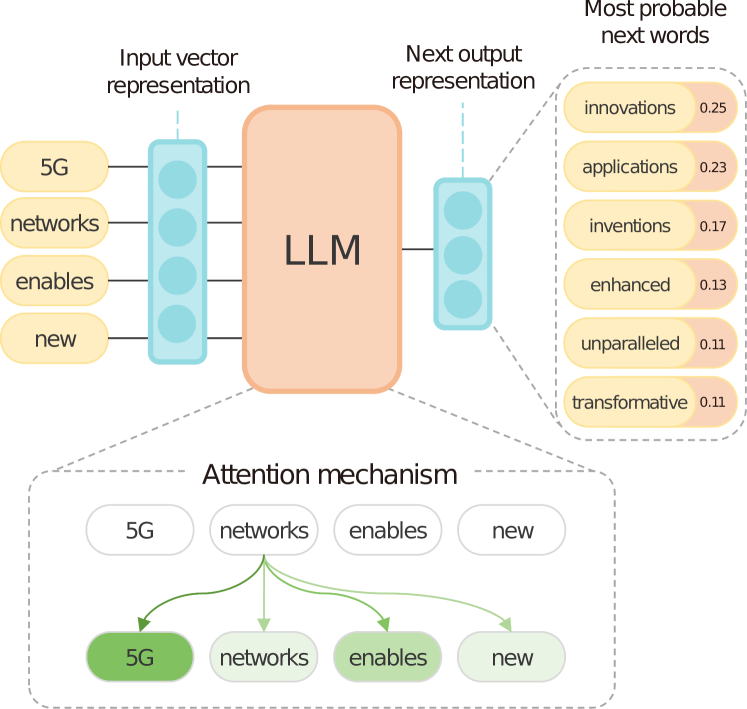

Enhancing the Accuracy of Large Language Models on Telecom Knowledge Using the TeleQnA Dataset

Sep 2024: Fine-Tuning GLiNER for Enhanced Location Mention Recognition in User-Generated Content

Oct 2024: Discursia - AI-Powered Language Learning App for Conversational Skills

Dec 2024: Dikoka - AI tool for analyzing complex historical documents using LLMs and RAG

An AI-powered platform exploring African history, culture, and traditional medicine, fostering understanding and appreciation of the continent’s rich heritage.

Feb 2025: GraphRAG-Tagger - End-to-end toolkit for extracting topics from PDFs and visualizing connections for GraphRAG

Feb 2025: Lightweight BERT-based model for identifying 200 languages, optimized for CPU deployment

Mar 2025: LangGraph AgentFlow - Python library for automating multi-step AI agent workflows using LangGraph

Mar 2025: LLM Output Parser - Python tool to reliably extract JSON/XML from unstructured LLM text outputs

Published in 2022

This work proposes an efficient solution for detecting and filtering misinformation on social networks, specifically targeting misinformation spreaders on Twitter during the COVID-19 crisis. The proposed Bidirectional GRU model achieved a 95.3% F1-score on a COVID-19 misinformation dataset, surpassing state-of-the-art results.

Recommended citation: Alex Kameni, 2022

Published in 2022

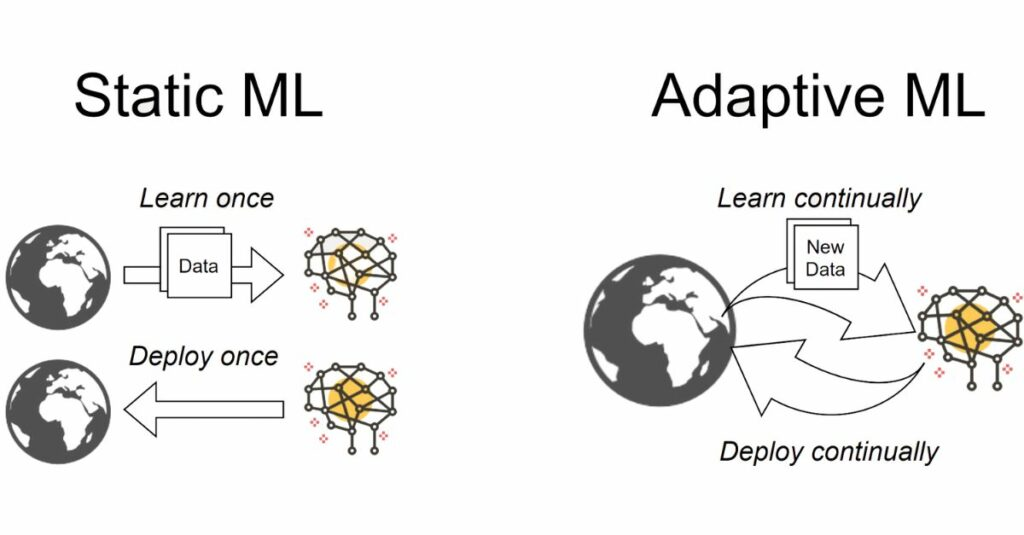

This study presents a framework for continual self-supervised learning of visual representations that prevents forgetting by combining distillation and replay, improving the quality of the learned representations even when data arrives sequentially.

Recommended citation: Alex Kameni, 2022
